The Scaling Laws Illusion: Curve Fitting, Billion-Dollar Funding, and The Great AI Funding Heist

How OpenAI Sold Wall Street a Math Trick

It started with a simple premise: Just scale.

Scale your models. Scale your data. Scale your compute. And intelligence will inevitably emerge.

This wasn’t just a theory - it was presented as a law. First came the Neural Scaling Laws, from OpenAI’s paper "Scaling Laws for Neural Language Models." Then came the Chinchilla Scaling Laws, from DeepMind’s paper "Training Compute-Optimal Large Language Models." Together, these papers made scaling sound as immutable as gravity.

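For context, the scaling laws claimed that a model’s loss would fall smoothly and predictably, following a power law, as compute, data, and model size increased in the right proportions. In the pitch, that became a promise of steady improvements in AI capabilities.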
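To make the claim concrete, here is a minimal sketch of the power-law shape the scaling-law papers describe for model size. The function name `power_law_loss` and the constants `n_c` and `alpha` are illustrative assumptions, not the values fitted in either paper.

```python
def power_law_loss(n_params: float, n_c: float = 1e14, alpha: float = 0.08) -> float:
    """Predicted loss under L(N) = (n_c / N) ** alpha.

    n_c and alpha are made-up illustrative constants, not published fits.
    """
    return (n_c / n_params) ** alpha


# Under a pure power law, every 10x increase in model size multiplies
# the predicted loss by the same constant factor, 10 ** -alpha.
for n in (1e8, 1e9, 1e10, 1e11):
    print(f"{n:.0e} params -> predicted loss {power_law_loss(n):.3f}")
```

On a log-log plot this is a straight line, which is exactly what makes it so easy to extend into the future with a ruler.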

And investors ate it up. After all, who wouldn't invest in a mathematical law as reliable as gravity?

Billions flowed in, poured into GPUs, data centers, and research teams. The pitch was simple: "Just keep scaling."

Tech giants raced to hoard GPUs like gold bars in a digital arms race. The only bottleneck was infrastructure. Just keep building, and AGI was inevitable.

But what happens when the law stops working?

The Getaway - When They Knew It Was A Lie

For years, OpenAI and others rode the scaling train to historic funding rounds. Then, suddenly, something changed:

The models weren’t improving at the same rate.

Costs were spiraling out of control.

The hardware demands were becoming unsustainable.

And then, the pivot.

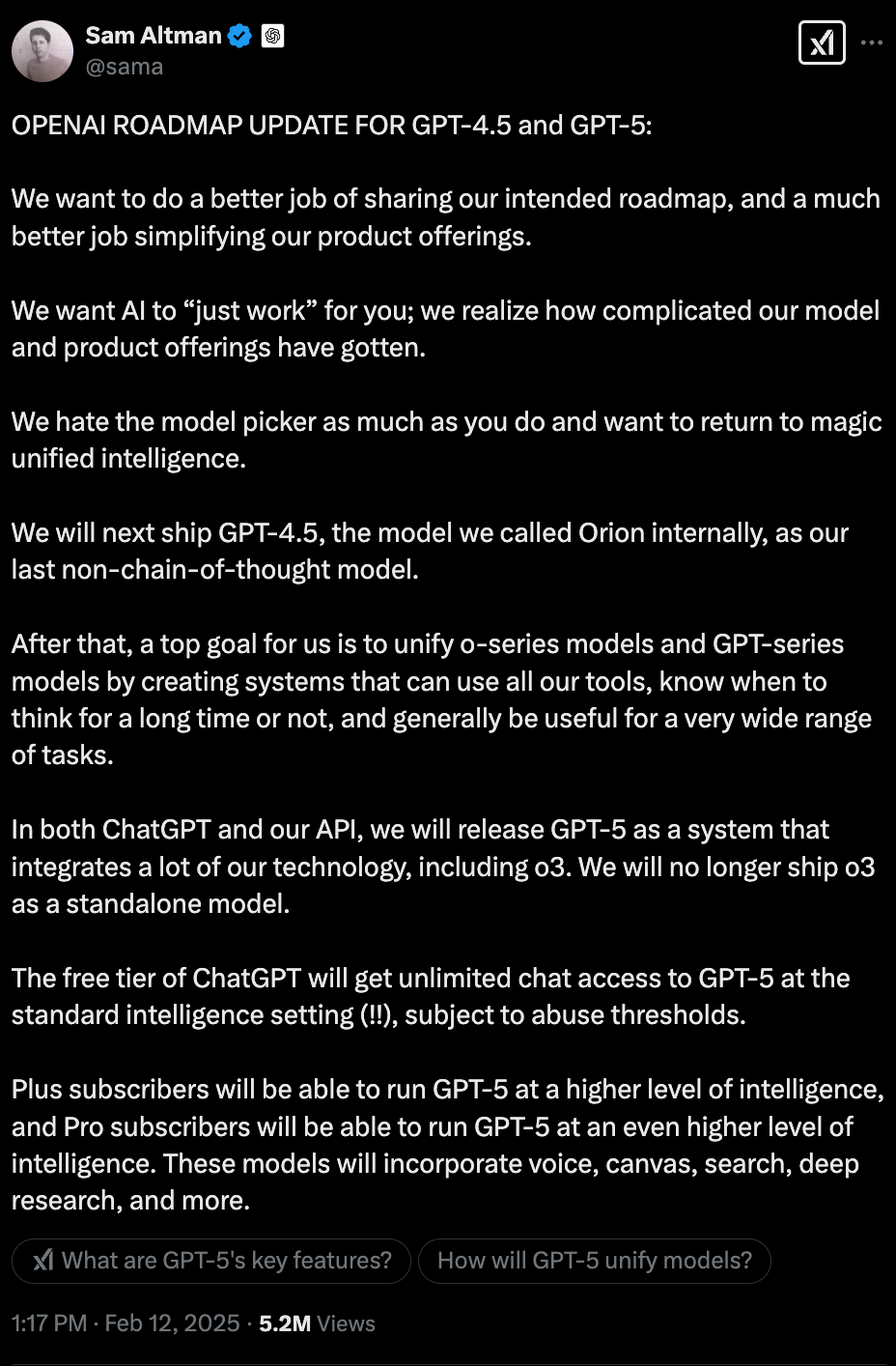

Gone is the talk of “just keep scaling.”

Now it is all about UX.

Now it is about AI experiences.

Now it is about efficiency.

The same OpenAI that pitched billion-dollar investors on mathematical laws is now abandoning those laws in real time.

And the best part? They never even have to admit it.

If scaling laws were scientifically valid, OpenAI wouldn’t be pivoting to UX - it would be doubling down on proving these ‘laws’ through continued scaling. Instead, they’re abandoning the very mathematical foundation they used to raise billions of dollars in capital.

The fact that they are pivoting to UX proves scaling laws were never laws, just temporary correlations that fell apart when tested at scale.

This isn’t a “second era of scaling” - it’s a rebranding of failure. We’re not watching scientific progress; we’re watching a funding narrative get rewritten as a UX strategy.

The Cover-Up - Rewriting The Narrative

Here’s how you rewrite history without anyone noticing:

Scaling laws aren’t failing… they’re "maturing."

Diminishing returns aren’t a problem… they’re "a second era of scaling."

We’re not pivoting away from our original thesis… we’re "evolving."

No refunds. No accountability. Just another round of funding.

The brilliance of this heist isn’t just that they pulled it off - it’s that they turned the failure into a feature.

Like a magician's misdirection, they want you to watch the UX transformation while forgetting about the billions raised on 'mathematical laws' that suddenly stopped working.

The magician’s misdirection worked. Scaling laws framed as immutable truths led to billions in funding, a global AI arms race, and the belief that intelligence was just a matter of more compute.

But here’s the twist:

The Chinchilla Scaling Laws, which implied that GPT-3 was undertrained for its size, contradicted the earlier Neural Scaling Laws that OpenAI had used to justify its approach.

Now, new research emerging from other AI labs suggests efficiency, not raw scale, is the dominant factor in performance improvements. If that’s the case, then Chinchilla was also wrong.

OpenAI isn’t just pivoting away from scaling laws - they’re contradicting the very research that once propped them up.

If scaling laws were truly valid, OpenAI wouldn’t be shifting its narrative to UX. They wouldn’t be talking about efficiency and “unified intelligence.” They’d be doubling down on proving their laws through more scaling.

Instead, they’re quietly moving on.

Because scaling laws weren’t laws at all.

They were just curve-fits that worked until they didn’t.
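Here is a toy sketch of how a curve-fit can "work until it doesn't." Everything in it is synthetic and assumed for illustration: `true_loss` is a made-up curve with a hard floor of 1.5, not data from any real model, and the helper names are hypothetical. The point is only that a straight line fitted in log-log space can look fine on the range it was fitted to and still extrapolate badly.

```python
import math


def true_loss(n: float) -> float:
    # Synthetic ground truth (an assumption): a power law plus a floor of 1.5
    # that no amount of scale can get below.
    return 1.5 + (1e9 / n) ** 0.3


def fit_power_law(xs, ys):
    """Ordinary least squares on (log x, log y); returns (slope, intercept)."""
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    mx = sum(lx) / len(lx)
    my = sum(ly) / len(ly)
    slope = sum((a - mx) * (b - my) for a, b in zip(lx, ly)) / sum(
        (a - mx) ** 2 for a in lx
    )
    return slope, my - slope * mx


def predict(n: float, slope: float, intercept: float) -> float:
    # Evaluate the fitted pure power law at model size n.
    return math.exp(intercept + slope * math.log(n))


# Fit only on the "small model" range, as early scaling studies had to.
train_sizes = [1e6, 1e7, 1e8]
slope, intercept = fit_power_law(train_sizes, [true_loss(n) for n in train_sizes])

# Compare the fit with the synthetic truth inside and far outside that range.
for n in (1e7, 1e10, 1e12):
    print(f"{n:.0e}: fitted {predict(n, slope, intercept):.2f}, true {true_loss(n):.2f}")
```

In this toy setup the fitted line tracks the small-scale points closely, then keeps falling past the floor that the synthetic curve can never cross. The fit was never wrong about the data it saw; it was wrong about everything it didn't.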

The Question No One Wants to Ask

The industry spent billions on a flawed premise.

And now, they want you to believe that it was always about UX, not scale.

So, if scaling laws were just an artifact of available data and hardware at the time, dressed up as fundamental truths…

💡 What else about AI is built on a house of cards? 🤔

Do you need billions to compete with OpenAI?

Does OpenAI truly have a moat?

If these 'laws' were just temporary empirical trends derived from curve-fitting on carefully selected data points to raise billions in funding, what's next? 🤔

If the fundamental 'laws' that justified billions in investment were just curve-fitting exercises... what happens when investors start asking for their money back? 🤔